Fisent BizAI delivers unparalleled accuracy and guards against hallucinations by applying a variety of implementation techniques and design controls when digitizing and interpreting content. Some of these techniques include:

BizAI is a pioneer in the “Intelligent Content Processing” (ICP) solution category. ICP is often mistaken for optical character recognition (OCR) or intelligent document processing (IDP), or assumed to be similar to them. While all three share a common purpose, which is to digitize content processing and enable automation, they are quite different. Below are the key differences business owners should understand.

BizAI’s full-optionality capabilities let Customers easily select and apply any model, on any host, of their choice. Customers can use the best-performing LLM, or the one they are most comfortable with for their use case, while BizAI takes care of security, error handling, upgrades, and more. Given how rapidly AI is evolving, a critical consideration for every company is how to “future-proof” its use of AI. Fisent BizAI tests and applies the latest Large Language Models (LLMs) as new, more capable options are released or as your use-case needs evolve. This holds true even if it means using a customer’s own model (BizAI supports Bring Your Own Model).

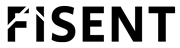

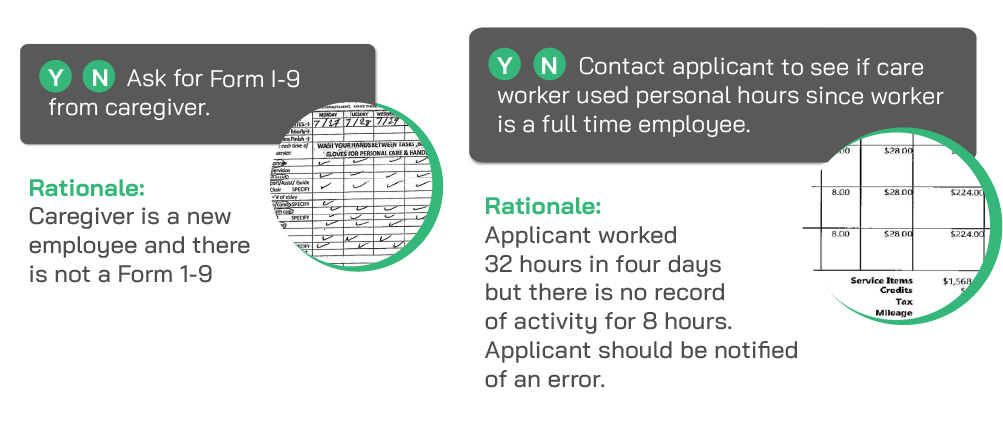

BizAI provides an entirely new level of transparency and auditability, transforming your risk posture. When customers use BizAI for digital content processing, BizAI returns a “rationale” with every AI-generated response. Anyone who wants to know “why did I get this response?” can see what the AI reviewed, what it considered, and what it ultimately concluded for every AI-generated output. This is groundbreaking. Consider knowledge-based processing today: humans perform it, and they don’t write down what they were thinking, what information they compared, or what other sources they used to reach a conclusion. BizAI does. The rationale is stored within the Customer’s application layer, allowing for easy review and auditing. Historically, AI has been a “black box” whose responses could only be understood with a highly specialized skill set; with BizAI, that is no longer the case.

BizAI has a zero-retention architecture: no customer data beyond simple API usage metrics (e.g., processing time and volumes) is persisted or retained. Fisent does not store customer parameters, inputs, or outputs, and does not train or fine-tune models with customer data. Additionally, the enterprise LLMs used within BizAI do not train on or persist any customer data. In other words, customers can process content without concern that their inputs or outputs will be retained in any form. Customer data is available or stored only within the customer’s own application layer, where BizAI returns its outputs.

One caveat to the above is a feature we call “memory”. Only upon a customer’s request, Fisent can enable memory for “enhanced content processing & retrieval”. With memory enabled for a specific use case, BizAI uses previously processed outputs to enhance the processing of new content at runtime. Think of it like human memory: “I’ve seen this before; the answer looks something like this…”. Again, this applies only at the customer’s request.

Yes. BizAI undergoes regular penetration testing by a specialized third-party security organization. Fisent also invests in multiple layers of test automation that run daily and include security-focused endpoint testing. In addition, the attack surface is restricted to a defined set of API endpoints served through AWS API Gateway, a mature, best-in-class managed service with built-in security controls.

Yes. While BizAI is built to enable full straight-through processing (STP), we understand that additional human validation may be required for some period of time. In fact, we strongly recommend that all customers start with human-in-the-loop (HITL) review before moving to STP, to ensure the outputs meet the needs of the business. HITL is typically built into the application layer where operators perform their day-to-day work. This provides a more integrated experience for users and allows Fisent to gather feedback on the service, which we use to tune the AI outputs where appropriate. Once BizAI’s reliability has been sufficiently validated in production, customers have the option to enable full straight-through processing.

Typical BizAI implementations take 5 to 7 weeks, and accelerated timelines are available depending on the customer’s SDLC processes and resource availability. Typical implementation schedule:
